Generative AI–Driven Fraud: Detection, Prevention, and the Future of Risk Management

The nature of fraud has shifted from rule-based automation to adaptive models capable of learning and evolving. Generative AI has introduced a new layer of complexity, making fraudulent behavior appear more realistic and more adaptive to detection systems. For financial institutions, this calls for a shift toward intelligence-driven prevention: systems capable of identifying risk patterns even when user interactions are simulated.

This article explores generative AI fraud in depth – how it works, how to detect it, and what to expect next. It provides strategic guidance and applied frameworks to help organizations address emerging risks through advanced detection technologies.

Generative AI fraud spans a range of tactics and modalities, each leveraging synthetic content to deceive systems or people. Understanding the variants is the first step toward meaningful defense.

One of the most visible categories is deepfake-enabled fraud. Bad actors generate audio, video, or face-swapped impersonations of real persons – celebrities, corporate leaders, or even private individuals – to lend legitimacy to fraudulent appeals.

Attackers can use AI-generated video calls to pose as a senior finance director, instructing employees to approve urgent internal transfers or release confidential client data. The conversations appear authentic in both tone and movement, often supported by deepfake voice models trained on publicly available recordings.

Live deepfake calls add a further twist: attackers can join video meetings in real time, with voice impersonation included, and try to deceive employees into authorizing illicit transfers or disclosing access credentials.

Large language models (LLMs) have elevated phishing into a more insidious domain. Rather than vague or grammatically awkward attempts, modern phishing messages are context-aware, personalized, and written in a fluent tone.

At the same time, synthetic identities – complete fabrications of personhood, credentials, and profile histories – are being composed using AI tools and used to pass identity verification or open credit accounts. These are harder to distinguish from “real” applicants than ever before.

Rather than a human operator sending emails or making calls one by one, attackers now deploy LLM-based agents that can plan campaigns, manage dialogues, and respond to users in real time.

Such agents can mimic multi-turn scam calls with realistic persuasion strategies and emotional tone modulation – capabilities that make social engineering scalable.

In many operations, fraudsters mix manual oversight with AI tooling. They may use AI to generate identity scripts or fake biometric data, then insert human intervention where adaptive logic is required. This hybrid structure lets them exploit the strengths of AI while compensating for its weaknesses.

In longer-term scams – called investment grooming or “pig butchering” – fraudsters nurture a target over weeks or months before monetizing.

AI-driven automation allows attackers to sustain these long-term fraudulent interactions by simulating trust signals and producing fabricated confirmations.

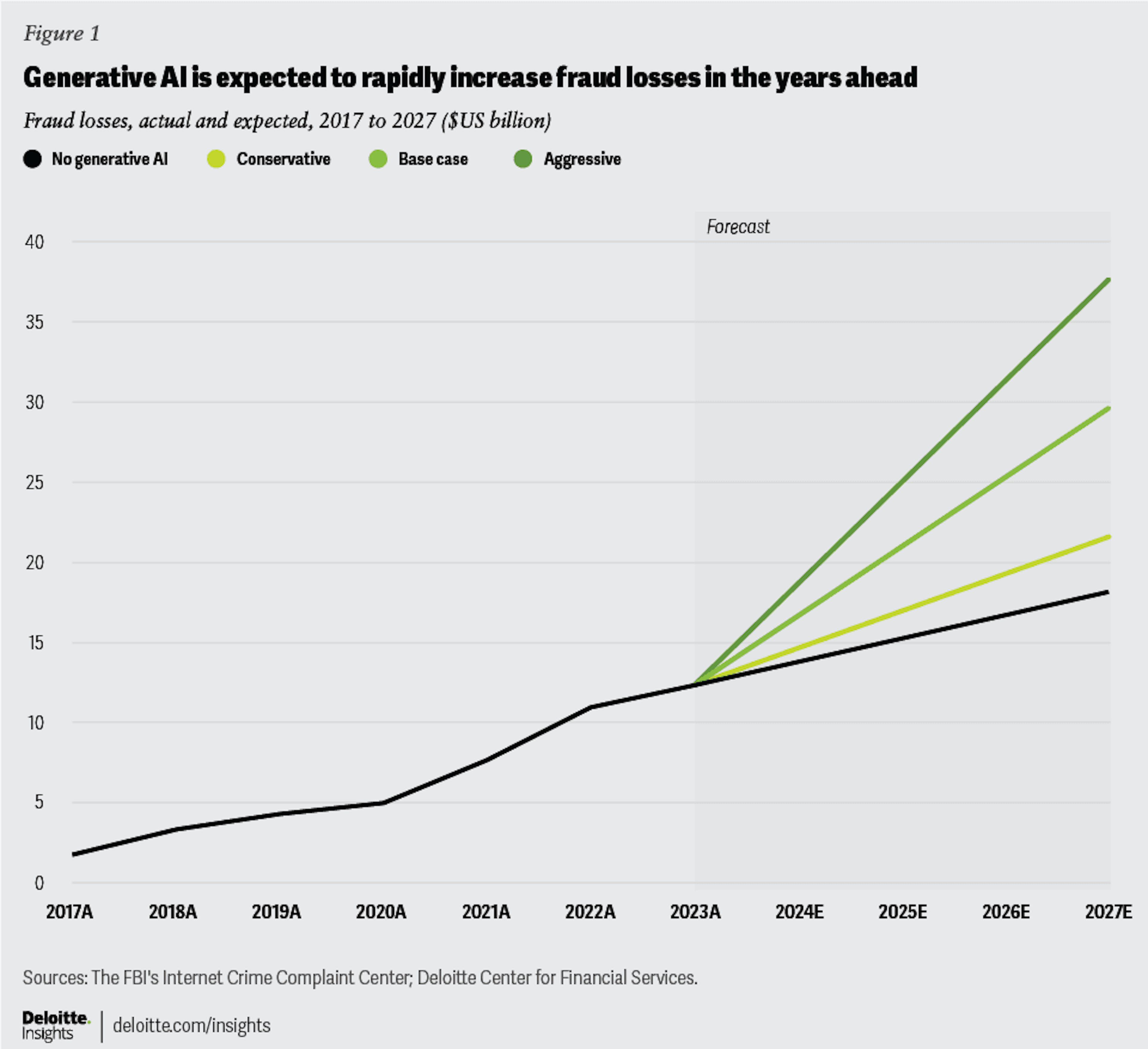

The scale is striking. Generative AI fraud in the U.S. alone is expected to reach $40 billion by 2027, according to the Deloitte Center for Financial Services.

Deepfake fraud attempts were up 3,000% in 2023 compared to the previous year. North America has emerged as the main target, with reported deepfake fraud cases surging by approximately 1,740% between 2022 and 2023. The financial damage continues to mount: in just the first quarter of 2025, losses in the region surpassed $200 million. The Asia-Pacific region has seen a similar trend, with a year-over-year increase of about 1,530% in deepfake-related fraud. This sharp growth shows how rapidly generative AI has amplified fraud operations.

Defending against generative AI fraud requires moving beyond static verification and embracing adaptive, data-rich systems. Traditional verification methods such as document scanning or biometric checks have limitations when faced with AI-generated or synthetic identities.

Together, these methods enable financial institutions to recognize not just the data presented on the surface, but the true nature of the entity behind the screen.

Because generative AI can produce synthetic voice, video, image, and text, defenses must evaluate every medium critically and cross-check each one against the others.

Generative AI may perfectly reproduce text or visuals – but it struggles to replicate human rhythm and behavioral diversity.

Behavioral analytics help spot this mismatch by analyzing micro-patterns of interaction: cursor movements, scroll velocity, tap pressure, dwell time, or the rhythm of typing and screen transitions.
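As a rough illustration of one such micro-pattern, the sketch below measures how regular a typing rhythm is. It is a minimal example under stated assumptions, not a production detector: the function names, the use of the coefficient of variation as the feature, and the `min_cv` threshold of 0.15 are all hypothetical choices made for this example.

```python
from statistics import mean, pstdev


def inter_key_intervals(timestamps_ms):
    """Gaps between consecutive key events, in milliseconds."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def rhythm_variability(timestamps_ms):
    """Coefficient of variation of the gaps (0.0 means perfectly regular).

    Returns None when there is too little data to judge.
    """
    gaps = inter_key_intervals(timestamps_ms)
    if len(gaps) < 2:
        return None
    avg = mean(gaps)
    if avg <= 0:
        return None
    return pstdev(gaps) / avg


def looks_scripted(timestamps_ms, min_cv=0.15):
    """Flag sessions whose typing rhythm is suspiciously uniform.

    min_cv is an illustrative threshold, not a calibrated value.
    """
    cv = rhythm_variability(timestamps_ms)
    return cv is not None and cv < min_cv


# Hypothetical key-press timestamps (ms since the session started).
scripted_session = [0, 100, 200, 300, 400]
human_session = [0, 120, 410, 480, 900, 1010]

print(looks_scripted(scripted_session))  # True: every gap is exactly 100 ms
print(looks_scripted(human_session))     # False: gaps vary widely
```

In practice a single feature like this would be one input among many (cursor paths, scroll velocity, dwell time), and any threshold would need to be calibrated on real traffic.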

While behavioral data reveals how an entity acts, device intelligence identifies what it acts from.

This layer is especially powerful against AI-driven and synthetic fraud, where traditional user identifiers are missing or manipulated.

While traditional KYC may be susceptible to manipulation, device intelligence provides a reliable technical layer that reflects genuine behavioral and environmental signals. It becomes the connective tissue that links synthetic patterns across different identities and time frames.
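The linking idea can be sketched in a few lines: group applications by a device identifier and surface devices that appear behind several distinct identities. This is a simplified illustration only. The field names `device_id` and `applicant_id`, the sample records, and the `max_identities` cutoff are assumptions made for the example, and real device fingerprints are built from many more signals than a single ID.

```python
from collections import defaultdict


def identities_per_device(applications):
    """Map each device ID to the set of applicant IDs seen on it."""
    linked = defaultdict(set)
    for app in applications:
        linked[app["device_id"]].add(app["applicant_id"])
    return linked


def shared_devices(applications, max_identities=2):
    """Return devices used by more distinct identities than max_identities."""
    return {
        device: sorted(ids)
        for device, ids in identities_per_device(applications).items()
        if len(ids) > max_identities
    }


# Hypothetical application records.
applications = [
    {"applicant_id": "A1", "device_id": "dev-1"},
    {"applicant_id": "A2", "device_id": "dev-1"},
    {"applicant_id": "A3", "device_id": "dev-1"},
    {"applicant_id": "B1", "device_id": "dev-2"},
    {"applicant_id": "B1", "device_id": "dev-2"},
]

print(shared_devices(applications))
# {'dev-1': ['A1', 'A2', 'A3']}
```

A repeat visit by the same applicant (as with `B1` above) does not raise the count, since only distinct identities per device are compared against the cutoff.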

Fraud evolves through imitation; defense must evolve through feedback.

Even with the most advanced automation, expert supervision remains essential.

Analysts review ambiguous cases, oversee escalations, and ensure that decision-making remains transparent and accountable. The strongest defense models combine AI precision with human judgment and clear compliance controls – creating a balance where automation enhances accuracy without replacing responsibility.
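One common way to combine automation with analyst review is to let the model decide only the clear cases and route everything in between to a person. The sketch below assumes a risk score between 0 and 1; the function name, the two thresholds, and the decision labels are hypothetical and would need to be set by each institution's own risk and compliance policy.

```python
def route_decision(risk_score, approve_below=0.3, decline_above=0.8):
    """Route a case based on a model risk score in the range [0, 1].

    Clear cases are decided automatically; ambiguous scores go to an analyst.
    The thresholds are illustrative defaults, not recommended values.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score < approve_below:
        return "approve"
    if risk_score > decline_above:
        return "decline"
    return "manual_review"


print(route_decision(0.10))  # approve
print(route_decision(0.55))  # manual_review
print(route_decision(0.95))  # decline
```

Widening the gap between the two thresholds sends more cases to analysts, which trades review workload for tighter human oversight of borderline decisions.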

Sophisticated fraud tools are being commodified. Deepfake-as-a-service, conversation script generators, and synthetic identity kits are sold openly on dark web markets, lowering the skill barrier for organized crime.

Research like ScamAgent shows LLMs can simulate multi-turn scam calls with persuasive logic. As integration with voice cloning and emotional modulation improves, expect fully autonomous fraud operations.

Attackers will train their models to bypass detectors, prompting defenders to implement adversarial retraining cycles. This “AI vs AI” dynamic will define the next era of fraud prevention.

Expect clearer legal definitions for synthetic identity and AI-based deception, along with transparency requirements, watermarking standards, and audit trail mandates for generative content.

Fraud tactics will increasingly span credit, payments, and insurance ecosystems, forcing closer collaboration between financial institutions, regulators, and technology providers.

Generative AI fraud has transitioned from isolated cases to a measurable and growing challenge across digital ecosystems.

At JuicyScore, we help organizations strengthen risk assessment through privacy-first device intelligence and behavioral analytics that expose hidden patterns no generative model can mask.

Book a demo today to see how our technology identifies synthetic behavior, detects virtualized environments, and enables faster, smarter, and more secure decision-making.

Generative AI fraud is a form of deception where fraudsters use generative models – LLMs, deepfakes, or synthetic identities – to impersonate real people or create fake ones for financial gain.

Generative AI fraud is increasingly automated. AI agents can run simultaneous conversations, adapt to user input, and even manage payment links, making scale a bigger threat than ever.

Institutions can detect generative AI fraud by combining device intelligence, behavioral analytics, and media forensics, checking not just what a user shows, but how and from where they act.

Device intelligence matters because it reveals the real environment behind an interaction. Even if an AI-generated persona looks and sounds legitimate, the device fingerprint, system signals, or session continuity often expose it as synthetic or virtualized.

Behavioral analytics captures micro-level human behavior (cursor movements, typing rhythm, hesitation, context switching) that generative systems can’t authentically reproduce.
